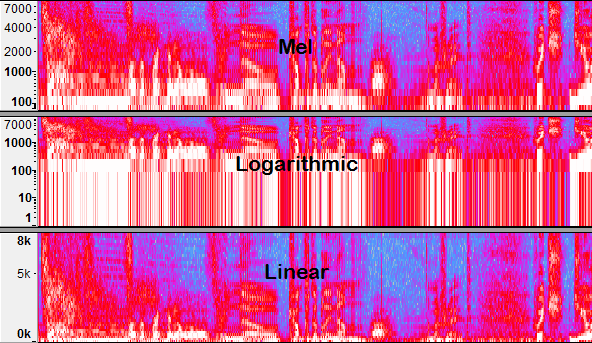

Another thing to add is "proper" plotting, which sets the x and y axes of your plot to the time and frequency scales required to interpret the spectrogram. This will become important later on if you want to resolve things like formants.
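As a rough illustration of what "proper" axes could look like, the sketch below derives a time axis (in seconds) and a frequency axis (in Hz) for a spectrogram built from `np.fft.rfft` frames. The helper names `spectrogram_axes` and `plot_spectrogram`, the choice of the window centre as the time stamp, and the dB scaling are assumptions made for this example, not something taken from the original code.

```python
import numpy as np


def spectrogram_axes(n_frames, window_size, hop, sample_rate):
    """Return (times_s, freqs_hz) for a spectrogram built with np.fft.rfft.

    times_s  -- centre of each analysis window, in seconds (an assumed convention)
    freqs_hz -- centre frequency of each rfft bin, in Hz
    """
    # Frame i starts at sample i * hop; use the window centre as its time stamp.
    times_s = (np.arange(n_frames) * hop + window_size / 2) / sample_rate
    # rfftfreq gives the window_size // 2 + 1 bin frequencies from 0 up to Fs / 2.
    freqs_hz = np.fft.rfftfreq(window_size, d=1.0 / sample_rate)
    return times_s, freqs_hz


def plot_spectrogram(magnitudes, times_s, freqs_hz):
    """Plot a (n_freqs, n_frames) magnitude array in dB with labelled axes."""
    import matplotlib.pyplot as plt  # imported here so the axis helper works without it

    level_db = 20 * np.log10(magnitudes + 1e-12)  # small offset avoids log(0)
    plt.pcolormesh(times_s, freqs_hz, level_db, shading="auto")
    plt.xlabel("Time (s)")
    plt.ylabel("Frequency (Hz)")
    plt.colorbar(label="Magnitude (dB)")
    plt.show()


# Example: 10 frames of 1024 samples, hop of 256 samples, Fs = 16 kHz.
times_s, freqs_hz = spectrogram_axes(10, 1024, 256, 16000)
```

With axes in seconds and Hz, a formant can be read directly off the plot as a horizontal band at a given frequency rather than as an unlabeled row index.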

#AUDACITY SPECTROGRAM CODE#

So with the exception of a few details and optimisations here and there, you are on the right track with your code. One thing still missing is a windowing function (this has nothing to do with the length of the sliding window you apply in your code, which is 1000 samples).

The window size sets the frequency resolution of the FFT: the spacing between frequency bins is the sampling frequency divided by the window size, so a longer window gives finer frequency resolution. In Audacity, a size 8 window is marked as "most wideband" and a size 32768 is marked as "most narrowband". Choosing a power of 2 as the window size is done to exploit the structure of the calculations in computing the FT and do it F(ast).

Of course, your window size is not independent of your sampling frequency, and there are limits to how many frequency components it can resolve given the data that has already been captured at sampling. With 16 kHz you can resolve (theoretically) up to 8 kHz. Therefore, resolving frequency content above that limit means capturing more data, which means increasing your sampling frequency.
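To put numbers on the relationship between window size, sampling frequency and resolution, here is a minimal sketch. The helper names `bin_spacing_hz`, `nyquist_hz` and `window_duration_ms` are made up for this example; the formulas are the standard ones (bin spacing is Fs / N, the highest resolvable frequency is Fs / 2).

```python
def bin_spacing_hz(sample_rate, window_size):
    """Distance in Hz between neighbouring FFT bins for a given window size."""
    return sample_rate / window_size


def nyquist_hz(sample_rate):
    """Highest frequency that can (theoretically) be resolved."""
    return sample_rate / 2


def window_duration_ms(sample_rate, window_size):
    """How much time one window covers, in milliseconds."""
    return 1000 * window_size / sample_rate


sample_rate = 16000  # Hz, as in the recording discussed here

# Compare the 1000-sample window with a few power-of-2 sizes.
for window_size in (8, 1000, 1024, 4096, 32768):
    print(
        f"N={window_size:>5}  "
        f"bin spacing={bin_spacing_hz(sample_rate, window_size):9.3f} Hz  "
        f"window length={window_duration_ms(sample_rate, window_size):8.1f} ms"
    )

print("Nyquist limit:", nyquist_hz(sample_rate), "Hz")
```

The trade-off is visible in the two columns: every step towards finer bin spacing costs a proportionally longer window, and therefore coarser timing.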

What you are lacking is frequency resolution, and the only way to increase this is to increase your window size. This will give the Fast Fourier Transform (FFT) the opportunity to resolve more frequencies. The 97% overlap between your windows, by contrast, already gives a very high temporal resolution. Audacity's parameters for deriving spectrograms are a useful reference here: all of Audacity's window sizes are powers of 2.
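A minimal sketch of a spectrogram computed with a larger, power-of-2 window might look like the following. The function name `stft_magnitude`, the Hann taper, and the default window and hop sizes are assumptions chosen for illustration; Audacity's own implementation is not shown here and may differ.

```python
import numpy as np


def stft_magnitude(samples, window_size=1024, hop=256):
    """Magnitude spectrogram with shape (window_size // 2 + 1, n_frames)."""
    samples = np.asarray(samples, dtype=float)
    if len(samples) < window_size:
        raise ValueError("signal is shorter than one window")
    taper = np.hanning(window_size)  # windowing function applied to every frame
    n_frames = 1 + (len(samples) - window_size) // hop
    frames = np.stack([
        samples[i * hop : i * hop + window_size] * taper
        for i in range(n_frames)
    ])
    # One rfft per frame; transpose so rows are frequency bins, columns are time.
    return np.abs(np.fft.rfft(frames, axis=1)).T


# Synthetic check (not the real recording): a 1 kHz tone at Fs = 16 kHz, 2 seconds.
sample_rate = 16000
t = np.arange(2 * sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 1000 * t)

spec = stft_magnitude(tone, window_size=1024, hop=256)
freqs = np.fft.rfftfreq(1024, d=1 / sample_rate)
peak_hz = freqs[spec.mean(axis=1).argmax()]  # frequency of the strongest bin
print(spec.shape, peak_hz)
```

Increasing `window_size` narrows the bins, while `hop` controls how often a new column is produced, so the two can be tuned separately.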

#AUDACITY SPECTROGRAM WINDOWS#

Your amplification gain is set too high, or you are too close to the microphone. The amplifier is driven to its limits and it clips the output. Keep this recording and make another one where you are a little further away from the microphone, so that you can later compare the differences in the spectrogram. It will be interesting to see how this clipping manifests itself in the frequency spectrum.

You asked how to get better resolution and clarity so as to read things such as formants in vowels, and noted that changing the size of your partitions still gives very similar results: more windows means less frequency data in each window, and fewer windows means poor time resolution.

Your signal is about 2 seconds long at a sampling frequency (Fs) of 16 kHz and you are dividing it into windows of 1000 samples that overlap by 965 samples. On line 7 of your point #6, you make use of `np.fft.rfft`. From the documentation, for an input of n points, "the length of the transformed axis of the output is therefore n//2 + 1". A 1000-sample window therefore yields 501 frequency bins, spaced 16 Hz apart.
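The window arithmetic and the clipping remark can both be checked numerically. The sketch below is only an illustration: `clipped_fraction` is a hypothetical helper, and the threshold assumes 16-bit PCM samples (as produced by the `pcm_s16le` conversion), where full scale is 32767.

```python
import numpy as np

# Window arithmetic for 1000-sample windows that overlap by 965 samples.
window_size = 1000
overlap = 965
hop = window_size - overlap              # samples between window starts
overlap_fraction = overlap / window_size
n_bins = len(np.fft.rfft(np.zeros(window_size)))  # n // 2 + 1 for real input

print(hop, overlap_fraction, n_bins)


def clipped_fraction(samples, full_scale=32767):
    """Fraction of samples sitting at (or beyond) the assumed 16-bit limit."""
    # Widen first: abs(-32768) would overflow if computed in int16.
    wide = np.asarray(samples).astype(np.int64)
    return float(np.mean(np.abs(wide) >= full_scale))


# Synthetic stand-ins for a too-loud and a quieter recording (not real data).
t = np.arange(16000) / 16000
loud = np.clip(1.5 * 32767 * np.sin(2 * np.pi * 200 * t), -32768, 32767)
quiet = 0.5 * 32767 * np.sin(2 * np.pi * 200 * t)

print(clipped_fraction(loud.astype(np.int16)))
print(clipped_fraction(quiet.astype(np.int16)))
```

A clearly non-zero fraction for a recording suggests the gain or the distance to the microphone should be adjusted before drawing conclusions from the spectrogram.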

#AUDACITY SPECTROGRAM PLUS#

The recording was in .m4a, which I then converted to .wav with ffmpeg:

```shell
$ ffmpeg -i computer.m4a -acodec pcm_s16le -ac 1 -ar 16000 computer.wav
```

Then I read the file:

```python
import numpy as np
from scipy.io import wavfile

sample_rate, samples = wavfile.read('/path/to/computer.wav')
```

Now I just do a quick plot of the samples.

Anyway, I carry on and, as I understand it, I perform a Fourier transform that will take my signal, decompose it into sine waves of different amplitudes and frequencies, and then get the real part of the complex numbers that are generated:

```python
samples_fft = np.fft.rfft(samples)
```

This is what I guessed it would look like, as vocal frequencies are typically in the range of 100 to a thousand, right?

A spectrogram is when I divide my samples into a certain number of windows, and then take the Fourier transform of each of the windows, resulting in a time-frequency graph:

```python
windows = np.linspace(0, len(samples), 1000)
# (the loop header and the slicing that produces samples_in_range are missing here)
samples_in_range_fft = np.fft.rfft(samples_in_range)
frequencies_in_range = np.abs(samples_in_range_fft)
spectrogram.append(list(frequencies_in_range))
```

Plot the spectrogram:

```python
audio_length = len(samples) / sample_rate
audio_time_intervals = np.linspace(0, audio_length, 999)
plt.pcolormesh(audio_time_intervals, tempfreq, spectrogram2)
```

So now I have a spectrogram, but of very poor quality. I've been watching YouTube videos such as this one. How do I get better resolution and clarity so as to read things such as formants in vowels? I tried changing the size of my partitions, but still get very similar results: more windows get less frequency data in each window, and fewer windows get poor time resolution.

I am very new to signal processing, so any help would be greatly appreciated, such as a point in the right direction or pointers as to what I'm doing wrong, in order to get clear spectrograms to study.

Note, I have tried Python packages such as signal.spectrogram, but the results are very poor as well. Plus I'd rather get to know the nitty-gritty so I really understand what is going on.
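The whole-signal Fourier transform step can be sketched as follows, with a frequency axis attached so the guess about the 100 to 1000 Hz range can be checked. Note that `np.abs` returns the magnitude of each complex coefficient, not its real part. The helper names and the synthetic three-tone signal are assumptions for this example; the real recording would be loaded with `wavfile.read` instead.

```python
import numpy as np


def magnitude_spectrum(samples, sample_rate):
    """Return (freqs_hz, magnitudes) for a real-valued signal."""
    samples = np.asarray(samples, dtype=float)
    magnitudes = np.abs(np.fft.rfft(samples))  # magnitude, not the real part
    freqs_hz = np.fft.rfftfreq(len(samples), d=1.0 / sample_rate)
    return freqs_hz, magnitudes


def band_energy_fraction(freqs_hz, magnitudes, low_hz, high_hz):
    """Share of the total spectral energy that lies between low_hz and high_hz."""
    power = magnitudes ** 2
    in_band = (freqs_hz >= low_hz) & (freqs_hz <= high_hz)
    return float(power[in_band].sum() / power.sum())


# Synthetic stand-in for a voiced sound (not the real recording):
# strong components at 150 Hz and 450 Hz, a weak one at 3 kHz.
sample_rate = 16000
t = np.arange(2 * sample_rate) / sample_rate
signal = (
    np.sin(2 * np.pi * 150 * t)
    + 0.5 * np.sin(2 * np.pi * 450 * t)
    + 0.1 * np.sin(2 * np.pi * 3000 * t)
)

freqs_hz, magnitudes = magnitude_spectrum(signal, sample_rate)
strongest_hz = freqs_hz[magnitudes.argmax()]
fraction = band_energy_fraction(freqs_hz, magnitudes, 100, 1000)
print(strongest_hz, round(fraction, 3))
```

Plotting `magnitudes` against `freqs_hz` rather than against the raw bin index makes it possible to read the spectrum in Hz.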

#AUDACITY SPECTROGRAM MAC#

So I've been self-studying signal processing, and I've been trying to use Python to get quality spectrograms like the ones that would be produced with Audacity etc. The test recording is me saying 'computer', recorded on my Mac.
